Advanced Micro Devices Inc. is looking to the GitHub of artificial-intelligence developers and to an open-source machine-learning platform spun off from Meta Platforms Inc. to take on Nvidia Corp. in the segment where the $1 trillion chip maker makes such high margins — software.

On Tuesday, AMD

AMD,

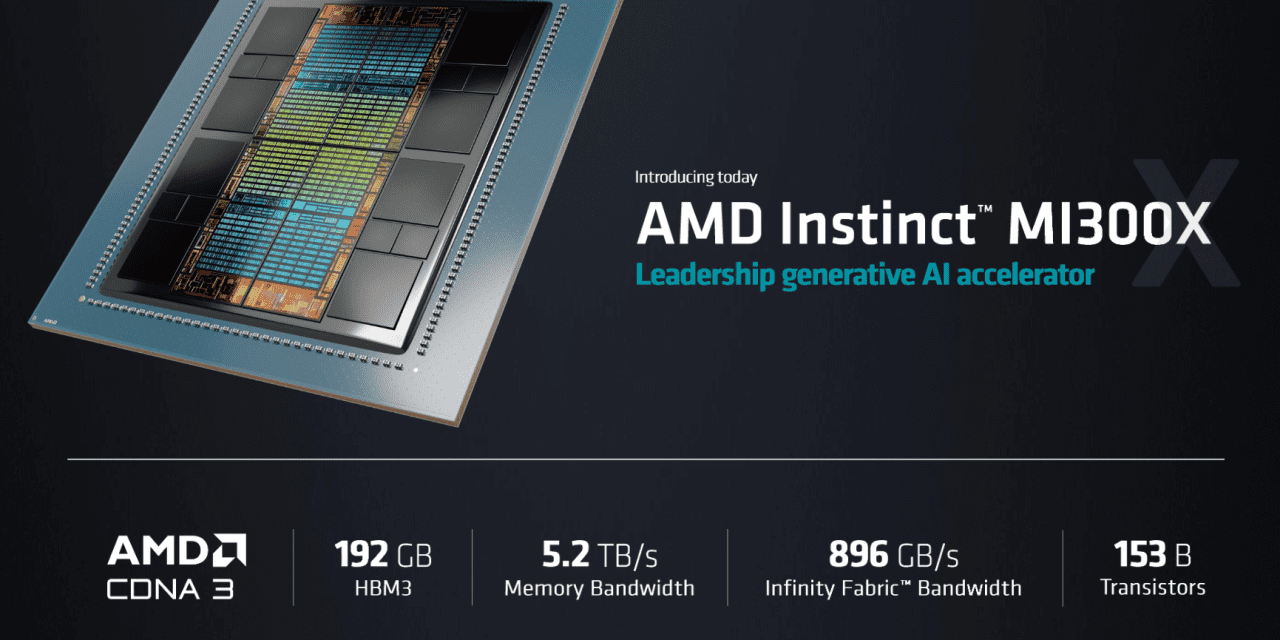

kicked off its version of Nvidia’s March developers conference and launched its new line of data-center chips meant to handle the massive workloads required by AI. While the company’s Instinct MI300X data accelerator graphics-processing unit was a highlight of the launch, AMD also introduced two partnerships: one with AI startup Hugging Face and another with the Linux-based PyTorch Foundation.

Both partnerships involve AMD’s ROCm AI software stack, the company’s answer to Nvidia’s proprietary CUDA platform and application-programming interface. AMD called ROCm an open and portable AI system with out-of-the-box support for porting existing AI models.

Clement Delangue, the CEO of Hugging Face, said his company has been described as “what GitHub has been for previous version of software, but for the AI world.” The six-year-old New York-based AI startup has already received $160.2 million in venture funding from investors like Kevin Durant’s Thirty Five Ventures and Sequoia Capital, according to Crunchbase, giving it an estimated valuation of about $2 billion. GitHub itself was acquired by Microsoft in 2018 for $7.5 billion.

With Hugging Face, users have access to more than half a million shared AI models, such as the image AI Stable Diffusion and most open-source models, Delangue said, in an effort to democratize AI and AI building.

“The name of the game for AI is ease of use,” Delangue said at a breakout session at AMD’s event. “This is a challenge for companies to adopt AI.”

So far, companies have been jumping on the OpenAI ChatGPT bandwagon this year or playing with APIs when they could be building their own, Delangue said. The privately held OpenAI, incidentally, is heavily backed by an investment from Microsoft Corp.

“We believe all companies need to build AI themselves, not just outsource that and use APIs,” Delangue said, referring to application programming interfaces. “So we need to make it easier for all companies to train their own models, optimize their own models, deploy their own models.”

Under the partnership, AMD said it will make sure its hardware is optimized for Hugging Face models. When asked how the hardware will be optimized, Vamsi Boppana, head of AI at AMD, told MarketWatch that both AMD and Hugging Face are dedicating engineering resources to each other and sharing data to ensure that the constantly updated AI models from Hugging Face, which might not otherwise run well on AMD hardware, would be “guaranteed” to work on hardware like the MI300X.

In early May, Hugging Face also said it was working with International Business Machines Corp. to bring open-source AI models to Big Blue’s Watson platform, and with ServiceNow on a code-generating AI system called StarCoder. ServiceNow is also partnering with Nvidia on generative AI.

Meanwhile, PyTorch is a machine-learning framework that was originally developed by Meta Platforms Inc.’s Meta AI and that is now part of the Linux Foundation.

AMD said PyTorch will fully upstream the ROCm software stack and “provide immediate ‘day zero’ support for PyTorch 2.0 with ROCm release 5.4.2 on all AMD Instinct accelerators,” which is meant to appeal to those customers looking to switch from Nvidia’s software ecosystem.

Gaining that software usage is important. As many analysts covering Nvidia have noted, the company’s closed, proprietary software moat and its roughly 80% share of the data-center chip market are what support the buy-grade ratings from 86% of the analysts who cover the stock.

In late May, Nvidia forecast gross margins of about 70% for the current quarter, while earlier in the month, AMD forecast gross margins of about 50%. AMD Chief Financial Officer Jean Hu said that the company’s strongest gross margins were in the data-center and embedded businesses, but that any additional improvements would have to come from the lower-margin PC segment.

One reason data-center products contribute to higher margins is how much of Nvidia’s software ecosystem is required to run the hardware supporting exponentially growing AI models, Nvidia CFO Colette Kress told MarketWatch in an interview in May.

Not only do data-center GPUs require Nvidia’s basic software, but Nvidia also plans to sell enterprise-AI services, along with creative platforms like Omniverse, to begin quickly making money on the AI arms race.