
Elon Musk recently told a Financial Times conference: “I think we are actually quite close to achieving self driving at a safety level that is better than human.” By “self-driving,” he meant “autonomy,” and his “best guess” is that “we’ll get there this year.”

Autonomy is supposed to make rides in Tesla’s planned robotaxis affordable and safe. Musk predicted that his company “will get far in excess of the safety level of human … ultimately probably a factor of 10 safer than a human as measured by the probability of injury.”

Don’t bet on it.

If yet another Musk prediction proves too optimistic, no one will be surprised – least of all Musk. But the problem here is not excessive optimism about the pace of improvement. So-called “autonomous vehicles” are not just taking longer to develop than expected. They are a dead end.

Skeptics about AVs have a decade of failed promises to point to. AV proponents’ reply is that the forecasts were just too optimistic about the pace toward the destination. The rate of progress may be disappointing, they admit – but we’ll get there.

But we have far more than a decade of experience to learn from, and this experience reveals persistent biases that inflate expectations.

For 90 years, we have been promised that engineering will nearly eliminate motor vehicle crashes. In 1934, the leading American expert in the field, Miller McClintock, promised that state-of-the-art highways could be “built in such a way that accidents will be impossible.” With access controls, grade separations, median strips, and shoulders, he wrote, “foolproof highways” are possible. McClintock estimated that such roads would reduce fatalities by 98% – enough to justify a promise of “complete permanent safety.” In the press, McClintock’s claims were widely accepted as fact. In 1937 the Chicago Tribune made “deathproof highways” the No. 1 plank in its editorial platform.

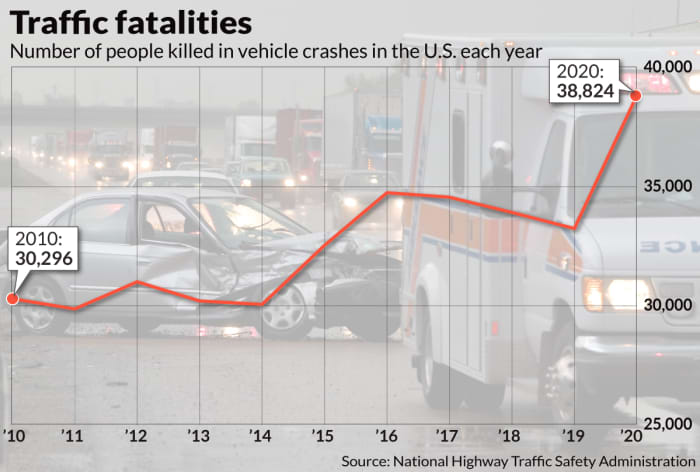

Limited-access highways are much safer than other roads, but nowhere near as safe as McClintock promised. Had he been right, the death toll today would be too small to give AV promoters their favorite moral argument.

Musk is promising to achieve the dramatic gains through incalculably more sophisticated technology – tech that takes over entirely from the human driver. This obvious difference, however, obscures the same elementary error in both forecasts, and in many others over the intervening decades. To arrive at their conclusions, the promise makers subtract all the hazards that make the status quo dangerous – without adding in all the major new hazards that the innovation introduces.

On limited-access highways, most of the new hazards were due to the speeds that such roads invite. If no driver exceeded 20 mph, deaths on them would indeed fall to near zero. But highways justify their cost by permitting much faster driving – at speeds that can be deadly. Similarly, the technology in AVs can make them much safer, but the faster the vehicle travels, the less the tech can do.

Sensors may sense almost instantaneously, but like humans, AVs can need time to interpret unusual objects with high reliability, and they must track moving objects over time to predict how they are likely to behave. At low speeds, such problems are manageable. A slow AV has time to interpret, track, predict and respond – and if it still fails, a low-speed collision may be minor. But to attract paying riders in large numbers, AVs would have to offer speed. And at speed, AVs’ errors will be far more frequent and much more lethal.

The comparison with “foolproof highways” has more to offer. People who drive on limited-access highways have to drive on ordinary roads and streets to get to and from them. The roads’ safety benefit cannot extend everywhere, and safer highways can increase the casualties on the roads around them just by attracting more driving.


Similarly, there is no direct path toward an unmixed AV future in which all vehicles on the roads are automated. There must be many years of mixed AVs and conventional vehicles. Conventional cars can be connected to a network, making them easier for AVs to monitor, but mixed traffic will remain so difficult that AVs will have to be either hazardous or slow.

If AVs are slow, this will prolong the transition (why ride in a slow AV?) and introduce new safety hazards as frustrated human drivers try to pass slow AVs.

Finally, AVs will continue to disappoint because they are misnamed in a way that inflates what we expect of them. “Autonomous” predisposes people to think of AVs as unbiased hyperrational beings with a “self” that can drive, and enough discretion to exercise autonomy. The misperception is apparent in Musk’s choice of words: “self-driving … that is better than human.” In developing an AV, engineers’ goal is to ensure that everything the vehicle does is what its human developers and operators want it to do. Unpredictable behavior by the vehicle must be extirpated. The goal in AV development is to ensure that humans so thoroughly determine how the vehicle performs that the vehicle itself can have zero autonomy.

AVs are in fact human-driven vehicles, though the operators are not in the vehicle, and they control it through programs rather than through a direct physical interface. These human operators must be biased – otherwise they will have no paying human customers. Except in niche applications or in services operated at a loss (such as Alphabet’s Waymo, and perhaps Tesla’s eventual robotaxis), they must not value safety so much that the vehicle never exceeds 20 mph. They must also value the customer’s experience more than they value the preferences of others outside the vehicle; if they don’t, they will lose their customers.

These human biases are business imperatives, and they come at a cost to safety that precludes the kind of safety gains that Musk and other AV promoters have predicted. AVs cannot become common until they can earn more revenue than they cost. And they can’t do that while prioritizing safety over customer satisfaction.

AV promoters have not been too optimistic about AVs’ pace of development. They have been wrong about what AVs have to offer.

Peter Norton is an associate professor of history in the Department of Engineering and Society at the University of Virginia in Charlottesville and the author of “Autonorama: The Illusory Promise of High-Tech Driving”.
